Introduction

Another role of the Azure Data Insights & Analytics team is building and maintaining the internal analytics and reporting for Azure Data. My job is to develop, maintain, and optimize the semantic models that support our reporting. To monitor and audit our models’ performance, we developed a tool called Semantic Model Audit, which is now available in the Fabric Toolbox open-source GitHub repo.

Overview

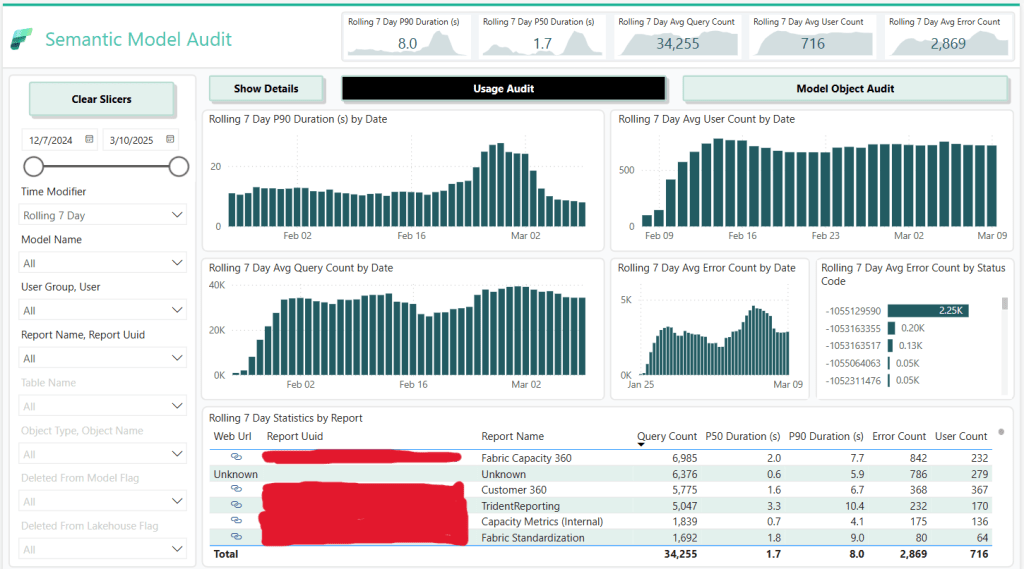

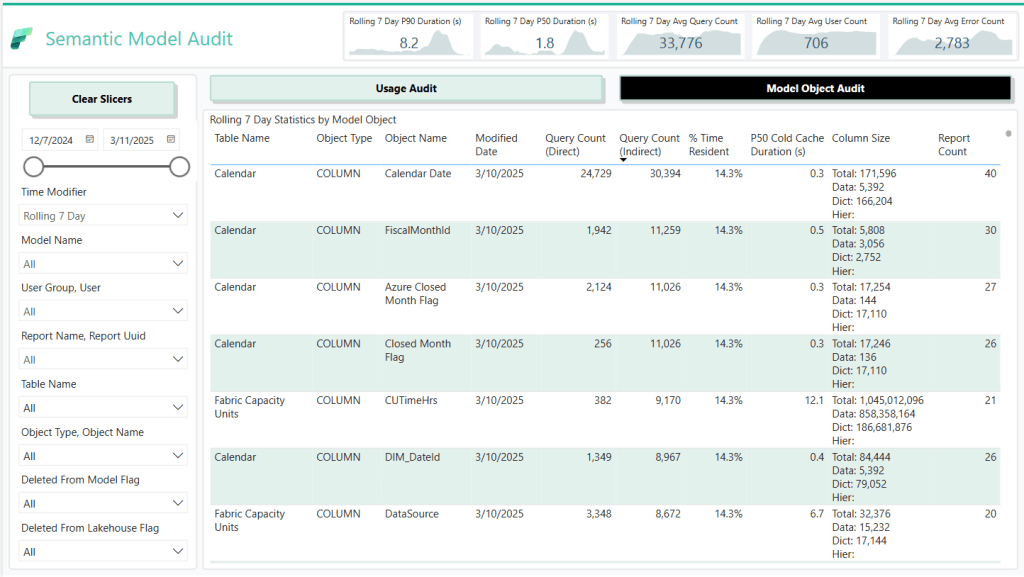

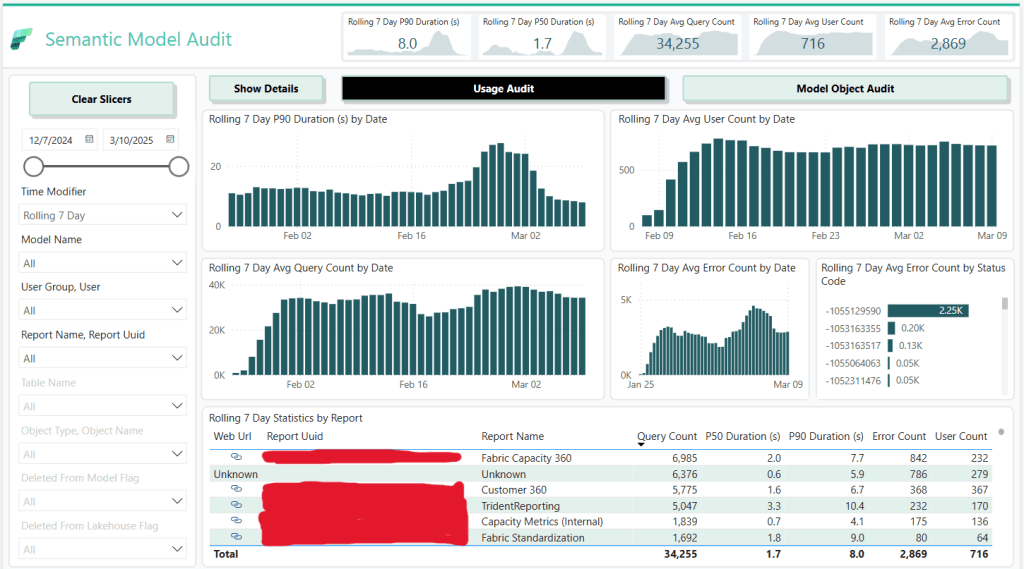

This tool is designed to provide a comprehensive audit of your Fabric semantic models.

The tool consists of three main components:

- The Notebook:

- Captures model metadata, query logs, dependencies, unused columns, cold cache performance, and resident statistics.

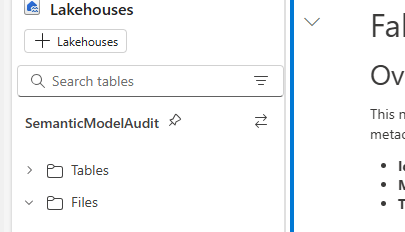

- Generates star schema tables (DIM_ModelObject, DIM_Model, DIM_Report, DIM_User, FACT_ModelObjectQueryCount, FACT_ModelLogs, FACT_ModelObjectStatistics) stored in a lakehouse.

- Includes robust error handling, scheduling, and clean-up functions to support continuous monitoring.

- The Power BI Template (PBIT File):

- Provides an interactive report built on the data captured by the notebook.

- The PowerPoint File:

- Contains the background images and design elements used in the Power BI template.
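
To illustrate how the star schema tables fit together, here is a minimal sketch in plain Python that joins a fact table to a dimension and ranks model objects by query count. The table names come from the list above; the column names (ModelObjectKey, ObjectName, QueryCount) and the sample rows are assumptions made for illustration and may not match the notebook's actual schema.

```python
from collections import defaultdict

# Hypothetical sample rows; the real data lives in the lakehouse Delta tables.
dim_model_object = [
    {"ModelObjectKey": 1, "ObjectName": "Sales[Amount]"},
    {"ModelObjectKey": 2, "ObjectName": "Sales[Total Sales]"},
    {"ModelObjectKey": 3, "ObjectName": "Customer[Legacy Code]"},
]
fact_model_object_query_count = [
    {"ModelObjectKey": 1, "QueryCount": 40},
    {"ModelObjectKey": 2, "QueryCount": 25},
    {"ModelObjectKey": 1, "QueryCount": 10},
]


def query_counts_by_object(dim_rows, fact_rows):
    """Sum fact rows per dimension key, keeping objects with zero queries."""
    totals = defaultdict(int)
    for row in fact_rows:
        totals[row["ModelObjectKey"]] += row["QueryCount"]
    # Left join from the dimension so never-queried objects still appear.
    joined = [(d["ObjectName"], totals.get(d["ModelObjectKey"], 0)) for d in dim_rows]
    return sorted(joined, key=lambda pair: pair[1], reverse=True)


ranked = query_counts_by_object(dim_model_object, fact_model_object_query_count)
for name, count in ranked:
    print(f"{name}: {count}")
```

Starting the join from the dimension side is a deliberate choice in this sketch: objects that were never queried show up with a count of zero, which is often the most interesting part of an audit.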

Requirements

- Workspace Monitoring:

- Ensure that Workspace Monitoring is enabled in your Fabric environment.

- Refer to this blog post for setup guidance.

- Scheduled Execution:

- Schedule the notebook to run several times a day (e.g., six times) for detailed historical tracking.

- Update run parameters (model names, workspaces, logging settings) at the top of the notebook.

- Lakehouse Attachment:

- Attach the appropriate Lakehouse in Fabric to store logs and historical data in Delta tables.
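
As a rough sketch of the run parameters and schedule described above, the following plain-Python example groups some settings into one object and computes evenly spaced start times for six runs a day. Everything here is hypothetical: AuditConfig, its field names, and evenly_spaced_run_times are illustrative only. The real parameter names are in the config cell at the top of the notebook, and the actual schedule is set in Fabric, not in code like this.

```python
from dataclasses import dataclass, field
from datetime import time


@dataclass
class AuditConfig:
    """Hypothetical run parameters; the real notebook's names may differ."""
    models: list = field(default_factory=list)  # (workspace, model) name pairs
    runs_per_day: int = 6
    collect_detailed_logs: bool = True


def evenly_spaced_run_times(runs_per_day):
    """Return start times that split a 24-hour day into equal intervals."""
    if runs_per_day < 1 or 1440 % runs_per_day != 0:
        raise ValueError("runs_per_day must divide 1440 minutes evenly")
    step = 1440 // runs_per_day  # minutes between runs
    return [
        time(hour=(i * step) // 60, minute=(i * step) % 60)
        for i in range(runs_per_day)
    ]


config = AuditConfig(models=[("Sales Workspace", "Sales Model")])
schedule = evenly_spaced_run_times(config.runs_per_day)
print([t.strftime("%H:%M") for t in schedule])
```

With six runs a day, the runs land four hours apart, which gives each snapshot of the statistics a fixed and comparable window.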

Key Features

- Model Object & Metadata Capture:

- Retrieves and standardizes the latest columns and measures using Semantic Link and Semantic Link Labs.

- Captures dependencies among model objects to get a comprehensive view of object usage.

- Query Log Collection:

- Captures both summary query counts and detailed DAX query logs.

- Unused Column Identification:

- Compares lakehouse and model metadata to identify unused columns in your model’s source lakehouse.

- Removing unused columns reduces the size of the model and can improve performance.

- Cold Cache & Resident Statistics:

- Deploys a cloned model to measure cold cache performance.

- Records detailed resident statistics (e.g., memory load, sizes) for each column.

- Star Schema Generation:

- Produces a set of star schema tables, making it easy to integrate with reporting tools.

- Integrated Reporting Assets:

- Power BI Template (PBIT): Quickly generate an interactive report from the captured data.

- PowerPoint File: Provides background images and design elements used in the report.
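
The unused column check described above boils down to a set difference between the columns in the source lakehouse and the columns the model uses. Here is a minimal sketch of that idea in plain Python. The function name, the (table, column) input shape, and the case-insensitive matching are assumptions of this example; the notebook's own comparison logic may differ.

```python
def find_unused_columns(lakehouse_columns, model_columns):
    """Return lakehouse columns that the model never references.

    Both inputs are iterables of (table, column) pairs. Names are compared
    case-insensitively, which is an assumption of this sketch.
    """
    def normalize(pair):
        table, column = pair
        return (table.strip().lower(), column.strip().lower())

    used = {normalize(p) for p in model_columns}
    return sorted(p for p in set(lakehouse_columns) if normalize(p) not in used)


# Hypothetical metadata for illustration only.
lakehouse_columns = [
    ("sales", "amount"),
    ("sales", "legacy_code"),
    ("customer", "name"),
]
model_columns = [("Sales", "Amount"), ("Customer", "Name")]

unused = find_unused_columns(lakehouse_columns, model_columns)
print(unused)
```

Before dropping anything this kind of check flags, it is worth confirming that no other model or downstream process reads the same lakehouse table.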

Why Use This Notebook?

- Consistent Testing: Automates cache clearing and capacity pausing for reliable comparisons.

- Scalable: Run as many queries as you want, as many times as you need, against your models and track each attempt.

- Centralized Logs: All results are stored in a Lakehouse for easy analysis.

- Versatile: Use cases include testing different DAX measure versions, comparing the impact of model changes on DAX performance, and comparing performance across storage modes.

Getting Started

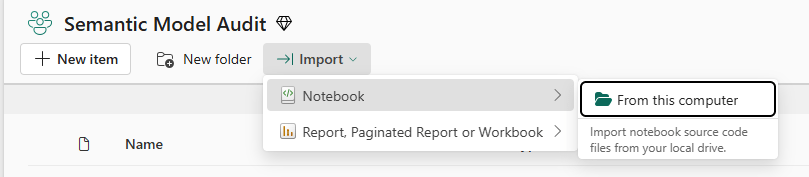

Download the notebook from GitHub and upload it to a Fabric workspace.

Attach a Lakehouse that will be used to save the logs.

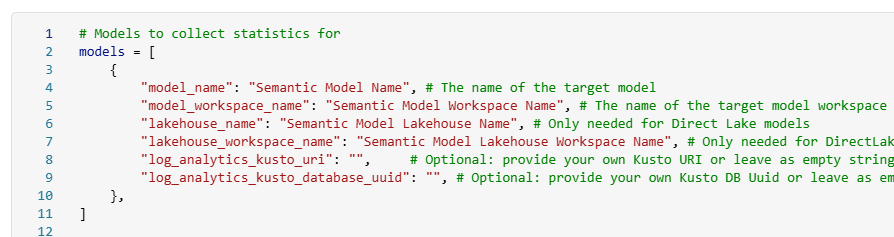

Update the list of models you want to audit.

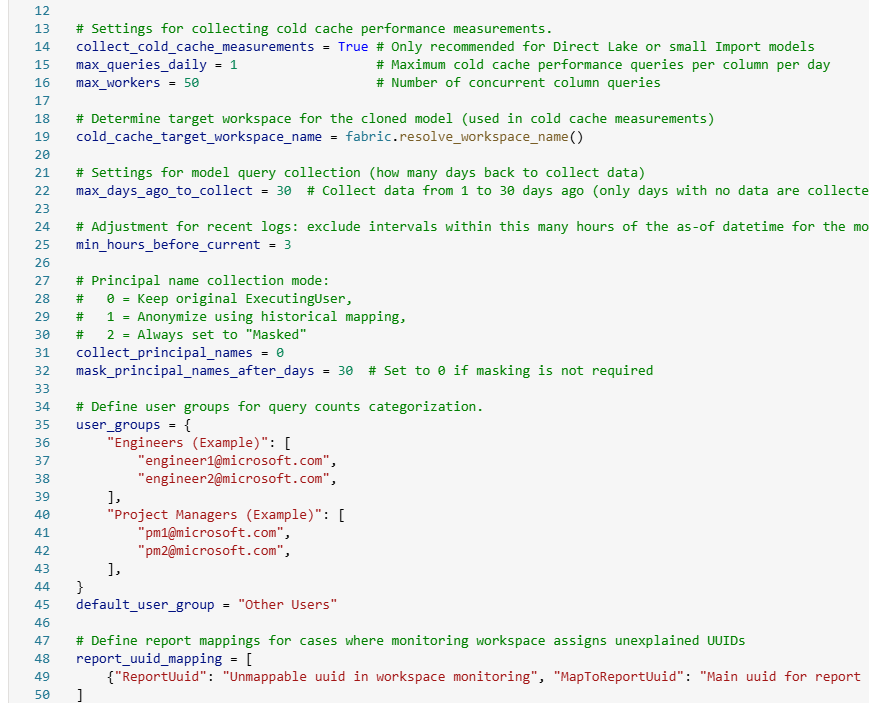

Configure the rest of the settings in the config cell. There are a lot of options, so read carefully. 🙂

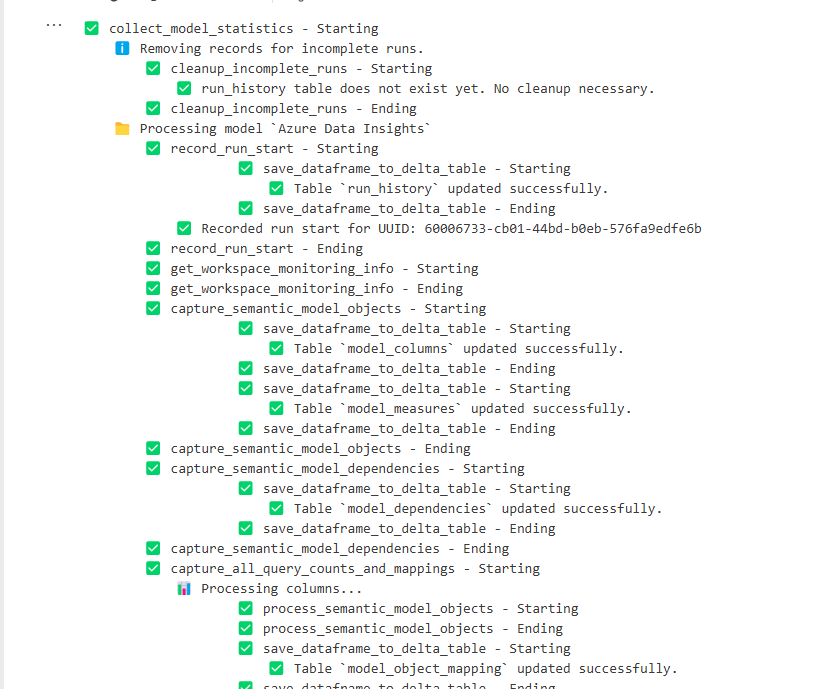

Run the notebook and collect the logs. If you want to understand what is happening, you can follow the output under the collect_model_statistics() cell as the run progresses.

After the first run has finished, download the PBIT file and connect to your lakehouse.

Conclusion

I hope you find this notebook helpful.

As always, if you have any questions or feedback, please reach out. I’d love to hear from you!
